Week 7 Notes

STAT 251 Section 03

Monday, Feb.19th 2024 - NO CLASS

Lecture 13 Wednesday Feb. 21st 2024

Common discrete distributions

We can think of a probability distribution as a model of a particular random process. Some types of random variables are commonly encountered and thus common models of these random variables have emerged to describe their probability distributions. Below we will introduce a few of the most commonly used probability distributions for discrete random variables.

The Bernoulli distribution

The Bernoulli distribution, named after Swiss mathematician Jacob Bernoulli, is the probability distribution of a discrete random variable with two possible outcomes \([0, 1]\) where the outcome \(X = 1\) occurs with probability \(p\) and the outcome \(X = 0\) occurs with probability \(1-p\). This random variable can be used to model any binary random process like flipping a coin, or any categorical variable with two categories such as male or female. The probability mass function - which we can think of as the function which assigns probabilities to different outcomes of random variable - is given below

\[ P(X) = \begin{cases} p & \ \text{if} \ X = 1 \\ (1-p) & \ \text{if} \ X = 0 \end{cases} \]

The Bernoulli distribution has a single parameter \(p\) which is referred to as the “probability of success” - referring to the probability that the outcome we are interested happens - such as getting Heads in a single flip of a coin. We usually denote that a random variable \(X\) follows a Bernoulli distribution mathematically as \[ X \sim Bernoulli(p) \]

In probability theory, the term expected value is often used instead of mean or average however they technically mean the same thing. The expected value of a probability distribution for a random variable \(X\) is denoted mathematically as \(E[X]\). The Bernoulli distribution has the following expected value and variance:

\[ E[X] = p \] \[ Var[X] = p(1-p)\]

where \(Var[X]\) means the variance of the random variable \(X\).

Its important to note than many types of random variables can be remodeled into a Bernoulli distribution. Take for example a random variable \(Z\) which assigns the numbers \(0\), \(1\), or \(2\), to people who have one of three categories of pets: cats, dogs, or fish, respectively. The following table gives the probability distribution of \(Z\):

| Z | Probability |

|---|---|

| 0 | \(p_1\) |

| 1 | \(p_2\) |

| 2 | \(p_3\) |

If we are interested in whether a single randomly sampled individual has a dog as a pet, then we may consider having a dog as the “successful” outcome and all other outcomes as “failures”. Then the random variable \(Y\) assigns a value of \(1\) to an individual who has a dog as a pet with probability \(p_2\) and a value of \(0\) to an individual who does not have a dog with probability \(1 - p_2 = p_1+p_3\). Thus the random variable \(Y\) follows a Bernoulli distribution:

\[ Y \sim Bernoulli(p_2) \]

The binomial distribution

The binomial distribution is probably the most widely used discrete probability model. A binomial random variable counts the number of “successful” outcomes out of \(n\) independent trials where each trial has probability of success \(p\). Like the Bernoulli random variable, the binomial distribution deals with dichotomous outcomes. In fact, a binomial random variable is a sum of \(n\) independent draws from a Bernoulli distribution. Thus the defining characteristics of a binomial distribution are

There is a basic random process with two possible outcomes denoted as “success” or “failure”

There are \(n\) independent repetitions of the random process

the probability of success \(p\) is constant all observations of the random process

Thus the number of trials \(n\) and the probability of success \(p\) are parameters of the binomial distribution. A random variable \(X\) which follows the binomial distribution is denoted mathematically as

\[ X \sim Binom(n, p) \]

Typical applications of the binomial distribution involve experimental outcomes such as the number of patients who recovered from illness while taking an experimental drug. Or the number of salmon that successfully spawn in a creek on a given day of the week.

The mean and variance of the binomial distribution are given by:

\[ E[X] = np \] \[ Var[X] = np(1-p) \]

The binomial distribution has the following probability mass function:

\[ P(X = k) = \binom nk p^k (1-p)^{n-k}\]

The first quantity is called the binomial coefficient and counts the number of ways to arrange \(k\) successes among \(n\) independent trials. Mathemtically it is defined as

\[ \binom nk = \frac{n!}{k!(n-k)!} \]

The symbol \(!\) is called a factorial. In mathematics, a factorial is the product of all positive integers less than or equal to a given positive integer and not including \(0\). \[n! = n \times (n-1) \times (n-2) \times \dots \times 1 \]

For example the quantity \(5!\) is computed as

\[ 5! = 5\times 4\times 3\times 2\times 1\]

It is also important to note that the quantity \(0! = 1\) which is admittedly not intuitive.

To explain in more detail, if we think of the \(n\) trials as a sequence of \(k\) ones (successes) and \(n-k\) zeros (failures), then the binomial coefficient is essentially enumerating and counting all possible permutations of that sequence. Consider the possible sequences of two successes in three trials: there are three possible sequences of successes and failures we could observe:

\[ (1, 1, 0) \\ (1, 0, 1) \\ (0, 1, 1)\]

Using the binomial coefficient we have

\[ \binom 32 = \frac{3!}{2! (3-2)!} = \frac{3\times 2\times 1}{2\times 1 \times 1} = 3 \]

Example: The random variable \(X\) models the number of hits in five at-bats for professional baseball player Luis Arraez of the Miami Marlins who has a hit percentage of \(79\%\). Thus \(X\) is a binomial random variable \[ X \sim Binom(n=5, p=0.79) \]

Compute the probability that Luis Arraez gets one hit in the next five at-bats

Compute the probability that Luis Arraez gets more than 4 hits in the next five at-bats

Lecture 14 Friday Feb. 23rd 2024

The Poisson distribution

The Poisson (pronounced “\(\text{Pwa - s}\tilde o\)”) distribution is named after French Scientist Siméon Denis Poisson (1781–1840). It models the probability that a given number of events occur in a fixed interval of time, assuming the events occur independently of each other and with a known constant mean rate \(\lambda\). Thus, a Poisson random variable has the following characteristics:

The occurrence of one event does not affect the probability that a second event will occur. That is, events occur independently.

The rate at which events occur is constant. The rate cannot be higher in some intervals and lower in other intervals.

Two events cannot occur at exactly the same instant; instead, at each very small sub-interval exactly one event either occurs or does not occur.

the events occur at rate \(\lambda\) - also called the rate parameter - and is striclty positive \(\lambda \in (0,1)\).

The probability mass function is given by

\[ P(X = k) = \frac{\lambda^k e^{-\lambda}}{k!}\]

where \(k\) is the number of events occurring, \(\lambda\) is the rate at which events occur. The quantity \(e\) is called Euhlers number. It is a numerical constant that arises often in mathematics and can be characterized in many different ways. One definition occurs as the limit point of the sequence \((1+1/n)^n\):

\[e = \lim_{n\rightarrow \infty} \left( 1+\frac{1}{n} \right)^n \approx 2.7183 \]

virtually all calculators will have a natural exponentiation button for this quantitiy which is defined as

\[ e = \exp(1) \]

The expected value and variance of a Poisson random variable are both given by the rate parameter \(\lambda\)

\[ E[X] = \lambda\] \[ Var[X] = \lambda\]

The Poisson distribution has a wide range of applications across scientific disciplines including finance, genetics, infectious disease, manufacturing and many more. Some examples of the poisson random variables are given below:

The number of mutations on a strand of DNA occurring in a given unit of time

The number of defective products produced per hour of assembly at a factory

The number of bankruptcies filed in a given month

The number of internet connection failures in a given month

Example: Let the random variable \(Y\) represent the number of phone calls placed to a call center each hour during peak customer service hours. Assume that calls typically occur at a rate of \(10\) calls per hour.

What is the probability that the call center receives \(8\) calls in the next hour?

What is the probability that the call center receives fewer than \(2\) calls in the next hour?

Continuous random variables

Recall that continuous random variables have an uncountable number of outcomes. As a result finding probabilities for continuous random variables requires a heavier mathematical treatment. For continuous random variables, the function which assigns probabilities to different outcomes is called a probability density function.

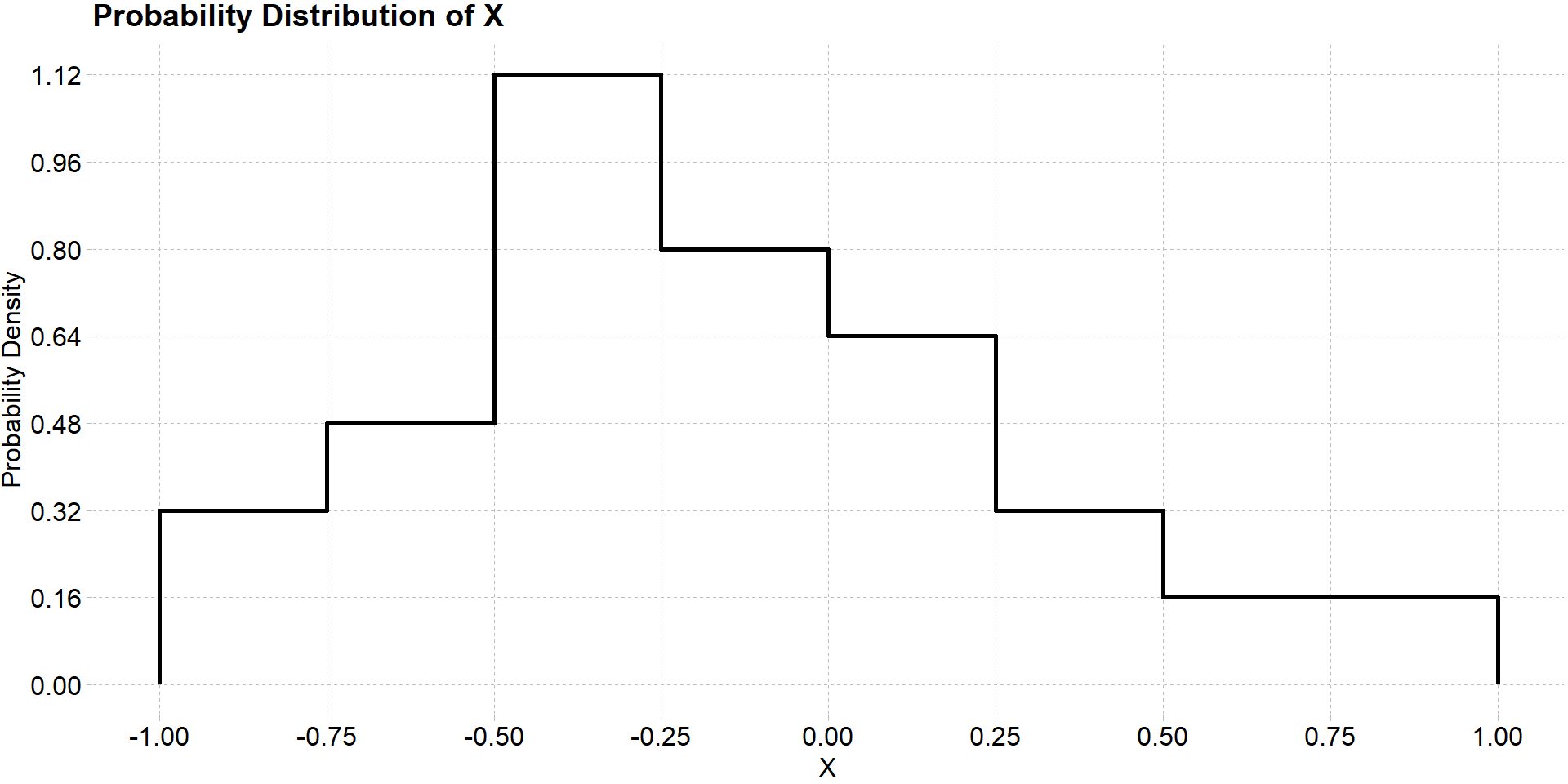

Since we cannot simply list the possible values of a continuous random variable and their probabilities, we have to rely on graphically displaying their distributions as we have done before. Consider the random variable \(X\) with probability density function \(f(x)\) given below

For a continuous distribution we cannot compute the probability that \(X\) is equal to some particular value because \(X\) can take on infinite number of values within a range. Thus the probability density is divided among an infinite number of outcomes making the probability of any one outcome effectively zero. We can however consider the probability of that \(X\) takes on a value in some sub-interval of its domain. For example, we can compute the probability \(P(X<0)\). Finding this probability amounts to finding the area underneath the probability density curve of \(X\) in the interval \([-1, 0)\).

Finding probabilities from continuous distributions

Normally, the mathematics of calculus would be required to compute this probability and we would need to integrate \(f(x)\) over this range of values. However, in the example above we can manually extrapolate the area under the curve. Notice that the area of the curve is divided into a grid. By finding the area of a single box in the graph, we can multiplying this area by the number of boxes in previously specified range to compute the total area under the curve. For example, the area of a single box is

\[ \text{Area} = 0.25 \times 0.16 = 0.04\]

Now that we know the area of a single box on the plot

\[P(X < 0) = 0.04*17 = 0.68 \]

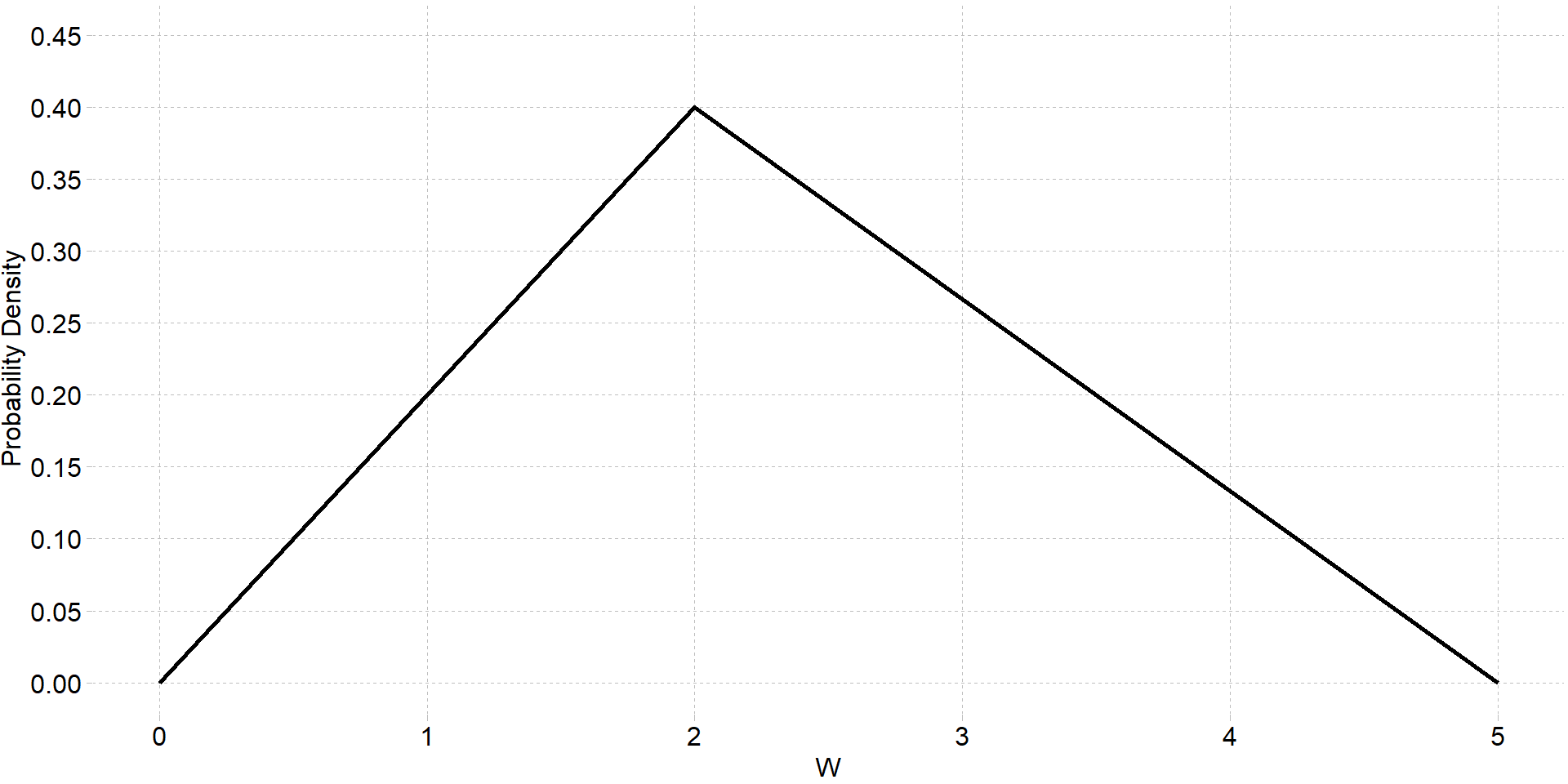

Lets consider another example: Below is the probability density function for a random variable \(W\)

- Find the probability \(P(W \leq 1)\). Again, to solve this we can extrapolate the area under the curve. Since the area between \(0\) and \(1\) of the distribution forms a right-angle triangle we can compute the area using the formula for the area of a triangle:

\[\text{area of triange} = \frac{1}{2}(b \times h) \]

where \(b\) is the base length and \(h\) is the height of the trangle.

\[ P(W\leq 1) = \frac{1}{2}(1\times 0.2) = 0.1 \]

Mean and variance of continuous random variables

- The mean \(\mu\) and variance \(\sigma^2\) of continuous random variable cannot (usually) be defined or computed without calculus but their interpretations are the same as for discrete distributions